Performance Fine-Tuning for MS SQL and Data Warehousing

Data Extraction Benchmark

Data extraction benchmarks for a plain table (no transformations) using well-sized network and computer resources:

MS SQL Server data transfer:

Within the same on-prem or private cloud SQL instance: avg of 50K to 1M rows/sec.

Over a 1GB+ local network: avg of 50K to 100K rows/sec.

DataSelf AWS cloud data warehouse:

Downloading (using 500MBps download speed): avg of 39K to 68K rows/sec.

Uploading (using 30MBps upload speed): avg of 9K to 14K rows/sec.

Reimports (transfers within the DB): avg 49K to 80K rows/sec.

DataSelf Azure SQL cloud data warehouse:

Downloading (using 500MBps download speed): avg of 32K to 56K rows/sec.

Uploading (using 30MBps upload speed): avg of 6K to 10K rows/sec.

Reimports (transfers within the DB): avg 7K to 13K rows/sec.

Note: SSMS data panel usually renders data slowly. Use ETL+, Excel or other tools to test speed.

OData data extraction:

Using paginated extraction (minimum impact in source system): 2K to 20K rows/minute.

Using continuous extraction (might impact the source system): 10K to 100K rows/minute.

ODBC data extraction: to be determined.

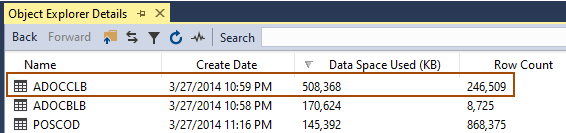

Example of performance test: the ADOCCLB table below (screenshot from SSMS) has 508MB of data space and 246K rows. If the extraction took 100 seconds to complete: rate of 2.5K rows/sec.

Recommendations

Check that the recommended system requirements have been met. More details at: System Requirements for DataSelf Analytics

Check that the data warehouse’s database(s) is using the latest SQL compatibility level available:

Open SSMS → right click the data warehouse database → Properties → Options → Compatibility Level = the latest available on this SQL instance → Ok.

If the source database is MS SQL, and it’s hosted in the same instance where the data warehouse is, be sure that both of the following parameters have exactly the same SQL instance name (do not use (local)):

ETL → Tools → SQL Server Connection → SQL Server Name

ETL → Action → Wizard → Table → Select the data source name → Edit → Server Name.

Check if there are malware software or other apps draining CPU resources. For instance:

Windows Defender is usually enabled by default and tends to drain CPU resources. Open Windows Task Manager → Details, see how much time Win Defender’s app MsMpEng.exe has been accumulating. As a general rule, we recommend either removing Windows Defender, or whitelisting MS SQL and DataSelf ETL from Win Defender.

Check that MS SQL Server (Windows Task Manager → Details → sqlserver.exe) can allocate as much CPU as needed when running intensive queries. On Windows Task Manager → Details, be sure to see columns Name, CPU (% CPU usage right now), and CPU time (accumulated CPU usage).

The SQL app should be able to allocate multiple CPU threads. For instance, on a server with 4 CPU threads, each CPU thread represents 25% of the total CPU capacity. Seeing SQL using 60% of CPU (CPU column) means it’s using 2 CPU threads and some extra from a 3rd thread.

Check that MS SQL Server (Windows Task Manager → Details → sqlserver.exe) can allocate as much RAM as needed. As a rule of thumb, the available RAM for SQL must be at least 50% bigger than the largest table by data size. For instance, if the largest data warehouse table has 8GB of data, SQL must have at least 12GB of RAM available for its tasks.

Avoid

varchar(max)when linking tables in SQL (such as in views or stored procedures).If SQL Server is remote, check if the network speed between the local computer and the SQL box is fast. SQL must render data quickly when one queries a single table with lots of data.

Set the data warehouse SQL database’s “Recovery model” set to Simple.

Set the data warehouse SQL database’s “Compatibility level” to the latest available.

If the server is still slow after the above has been addressed:

Many queries will run slowly when they link several tables. In many cases, there’s a single table/link that is causing most of the slowness. To narrow down to that table/link, take the query, remove one parent table, run the query and monitor the performance, if slow, remove another table, run the query, do this until you find which table/link makes it run fast. Once that slow table/link is found, start the test from scratch again and focus on removing that table/link as the first step and see if that table is the only factor affecting the slowness.

Add indexes to fields being used to link the largest tables.

Consider moving extensive data transformations to the table level, not at the query level.

Memory Use Info from SQL Server

SQL to extract memory usage info from MS SQL Server.

SELECT [name], [value], [value_in_use]

FROM sys.configurations

WHERE [name] = 'max server memory (MB)' OR [name] = 'min server memory (MB)';