Capturing Snapshots

Capture periodic snapshots of data, such as Open Receivables, for later reporting.

Warn the client: Not for mission-critical uses

There may be days that are missed for various other load-issue reasons. And even if we make the snapshot append process into its own Load Job so that they can watch more carefully for errors, the snapshot data still may be unrecoverable later. If they have mission-critical snapshot (or history of field changes) needs, they should get a program that works directly with their ERP.

Nightly Refresh Steps

Run the following at the end of the nightly refresh:

Have SQL view that gathers the basic needed data and adds DateTimeStamp

CONVERT(DATE, GETDATE())(which SQL gets once and shares identically with all records) to provide a clean (no time) DateStamp to use with the Replace load.(Alternatively, might want to use DT_LatestLoadStart, if the refresh starts before midnight and ends after, so that it has yesterday’s date)

(Backup process) Export this basic data view to csv with the datetime stamp as part of the file name, with auto-deletion of older exportsWe can put this process in a dedicated Job and alert the client the monitor this refresh notification more closely. This should notify most issues.Have a table that keepsappendingthese basic-data batches, potentially multiple in same day in case of refresh issues.Make a Replace table to import the day’s snapshot. Set the replace to Replace [DateStamp] > MAX(DateStamp)Have SQL view that does a GroupBy with two columns:

i. Date (from DateTimeStamp) (so one record per Date)

ii. MAX(DateTimeStamp) *

In tableau: Link the GroupBy view’s MAX(DateTimeStamp) to the append table’s DateTimeStamp

As an equal join, to eliminate extras

ORAs a left join, in case they want to report on the excluded timestamped postings

Then link the GroupBy’s Date from this view to 5_Date, so this is the date used in reporting

*To fine-tune these choices, maybe not use MAX(DateTimeStamp), there is plenty of open-box programming here to do whatever. But most of the time, the reason for a second posting in one day is because of a failure of the first one, resulting in some sort of bad data. In which case, the MAX is desired.

***********************************************

Components

Basic Data example

Open AR example for the basic needed data append table:

customerID

Invoice SlspnID

InvoiceDate

InvNumber

InvDueDate

DocType

original amount

open $

GETDATE() AS DateTimeStamp

Keeping the number of columns to a minimum helps offset the number of records that will accumulate day after day

CSV Export

Run the csv export

Run batch/powershell files that maintain it

renames the latest on to ExportName + DateTime stamp (from file datetime)

deletes older backups

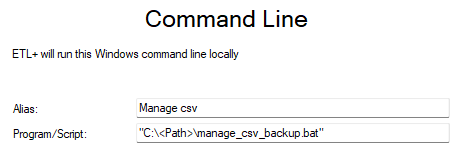

The batch file will call the PowerShell script as follows:

REM Edit the command so that:

REM -file points to manage_csv_backup.ps1

REM -TableName is the name of the exported file

REM -Path is the folder the .csv file is exported to

REM For more details, run the following command in PowerShell: Get-Help manage_csv_backup.ps1

PowerShell.exe -NoProfile -ExecutionPolicy Bypass -file "C:\<Path>\manage_csv_backup.ps1" -TableName "<schemaname.tablename>.csv" -Path "C:\<Path>\DS_Downloads" -DaysToDelete 60Unless you have Admin privileges on the machine this task runs on, you cannot run this task manually. It must be run via the Agent.

manage_csv_backup.ps1 manage_csv_backup.bat

Multi-location Jobs and Agent Locations

If this snapshot needs to run all on one box it can be in the same job. But if we need to be able to run it from any box, our ETL needs to be able to call the csv job from anywhere and run it under the correct agent.

Future ETL+ release will have ability to

run string of jobs

with diff agent for each job